If you got here via a link to the slides

Please check out the full git repository for the presentation notebook as well as other example notebooks.

https://github.com/jbarratt/ipython_notebook_presentation/tree/linuxcon

Outline

- What is the notebook

- OMG it's the best

- Quick Demo

- IPython Ecosystem (2.0 Caveat)

- Why is it awesome

- Literate Code

- Sharing

- Rapid Prototyping/Exploring/Learning

Terminal (Editor <-> Renderer) -> Email vs. Cyclic

- Blogging

- Workflows (edit, share, publish)

- Under the hood

ipynb - HTML

- Gist + nbviewer

- Under the hood

- Demos

- Code Mentorship (sets, objects, katas)

- Runbooks

- Log analysis epic

- Shell scripting demo

- Churn analysis

- Latency Heatmap

- Extending

- It came from inside the presentation!

What is the notebook?

A "browser-based interactive computing environment"

Why are we talking about it today?

- Extremely useful tool, but not well enough known outside Academia/Science.

- Makes the (powerful) python data/science/module ecosystem even more powerful

- If you code (even if not in Python), do sysadmin work, write documentation, blog, or do any analysis or visualization, you might get a lot out of the notebook.

Why are you talking about it today?

I fell in love.

Apologies to my beautiful baby daughter for photoshopping her out

Why It's Great

These attributes will come up over and over as we explore this tool.

"Literate"

The other attributes will be clear, but a word on Literate (apologies to Knuth for the oversimplification)

This is a big part of where the title comes from: it's about the story more than the software. (Because of inline output, Notebook may even be 'SuperLiterate'.)

Update: IPython's founder, @fperez_org kindly pointed me to a blog post of his; they prefer the term Literate Computing.

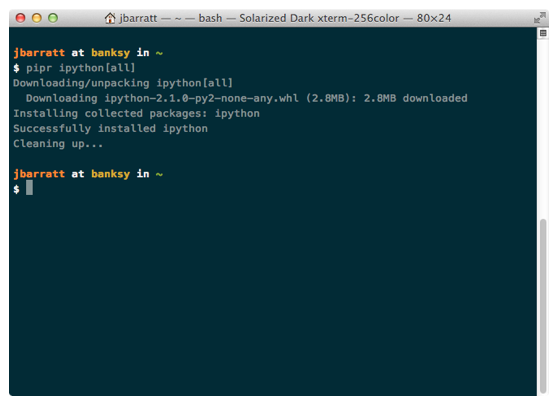

Enough Meta: Let's Install It.

pip install ipython[all]? That was easy.*

*Dependencies can be painful, YMMV.

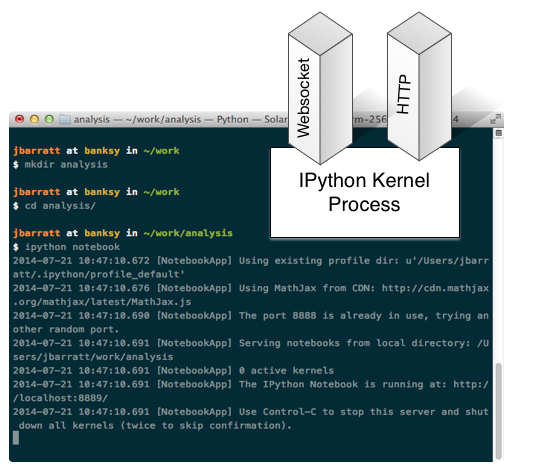

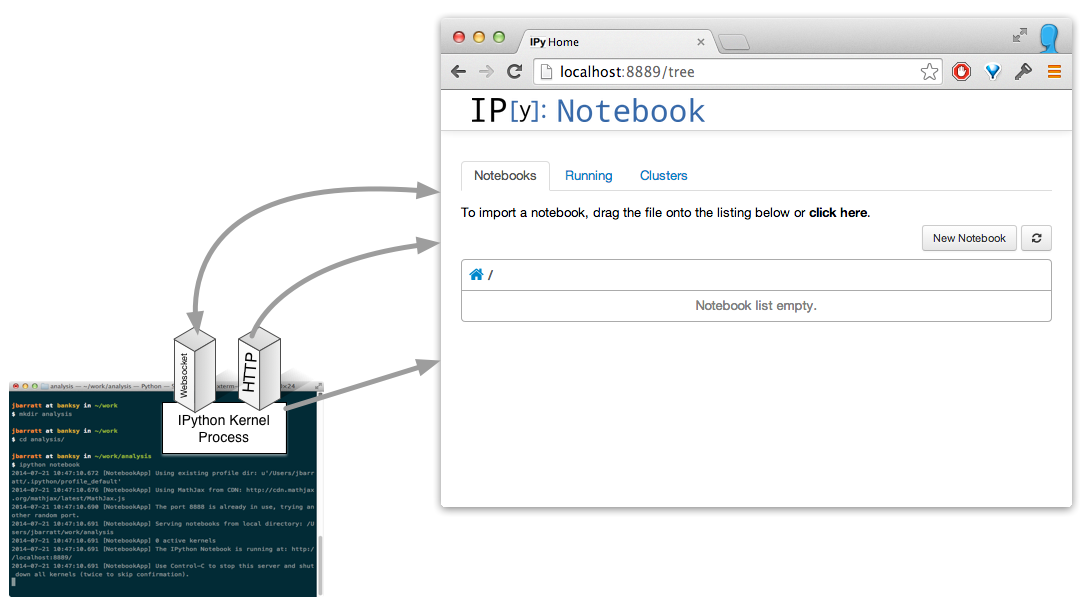

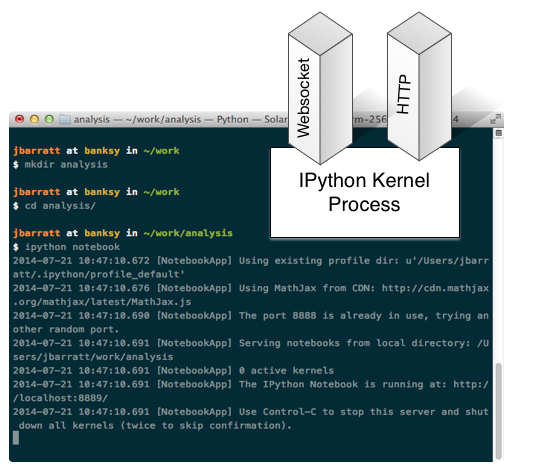

Run 'ipython notebook'

Clarification: As @fperez_org pointed out, the kernel only speaks ZeroMQ; the notebook process handles browser communications.

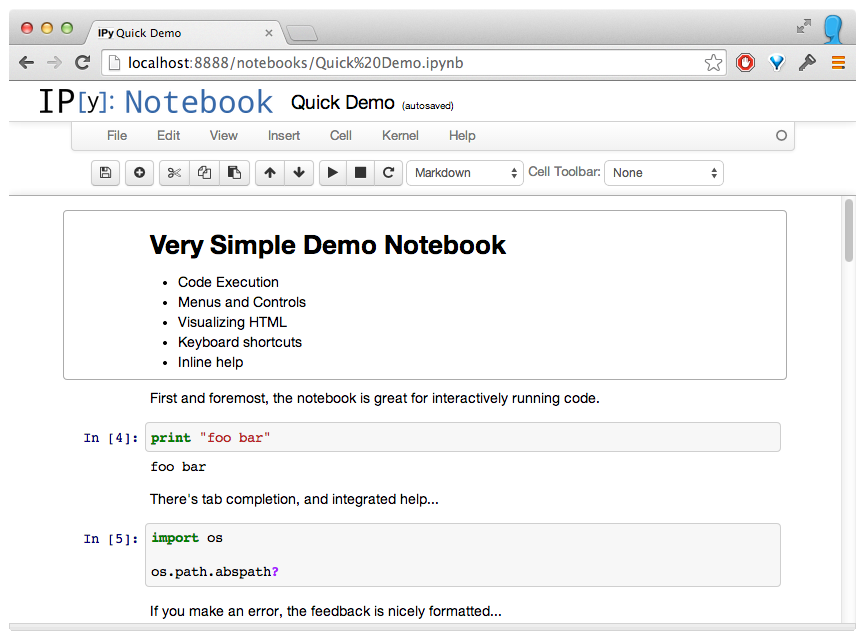

Browser Launches

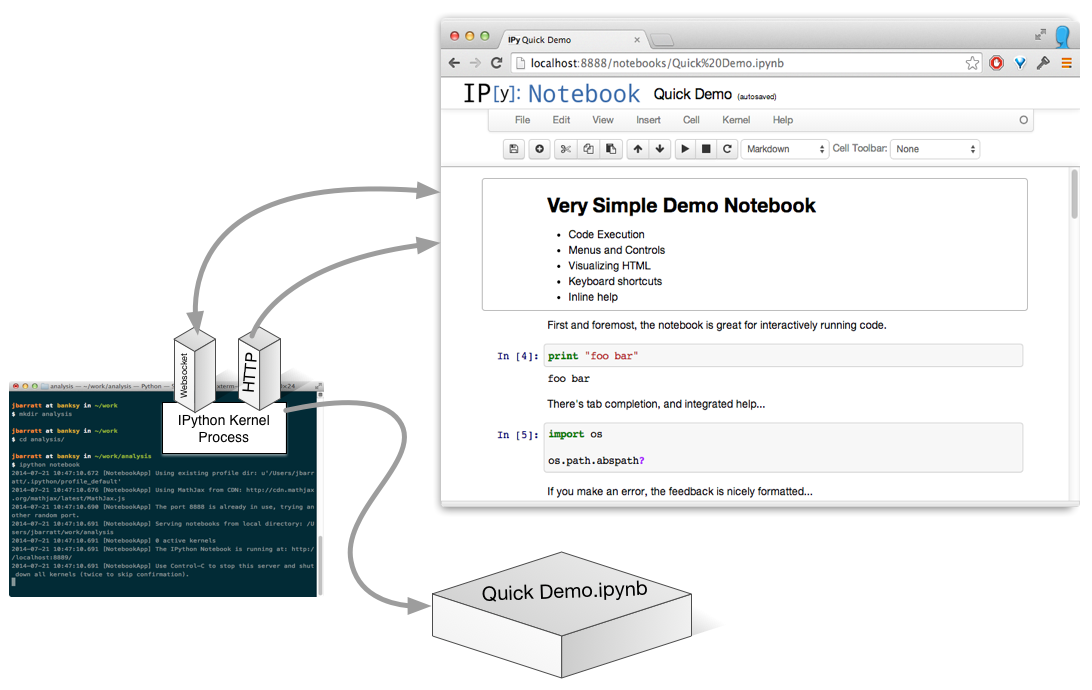

Build a notebook

(Actually, it's a process per open notebook.)

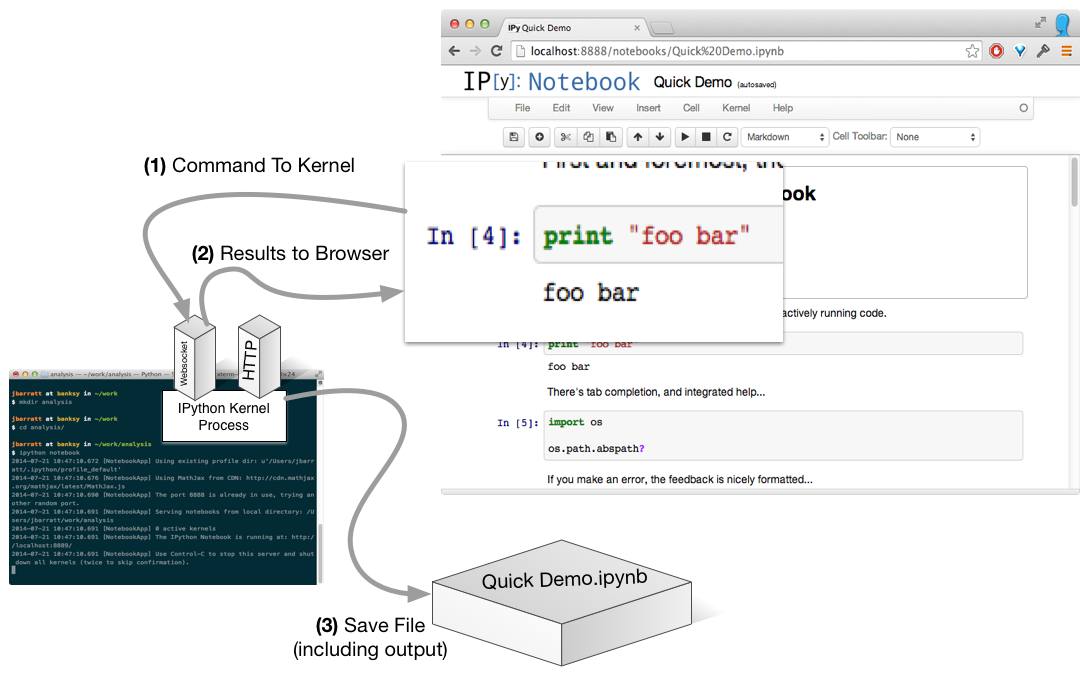

Run A Cell

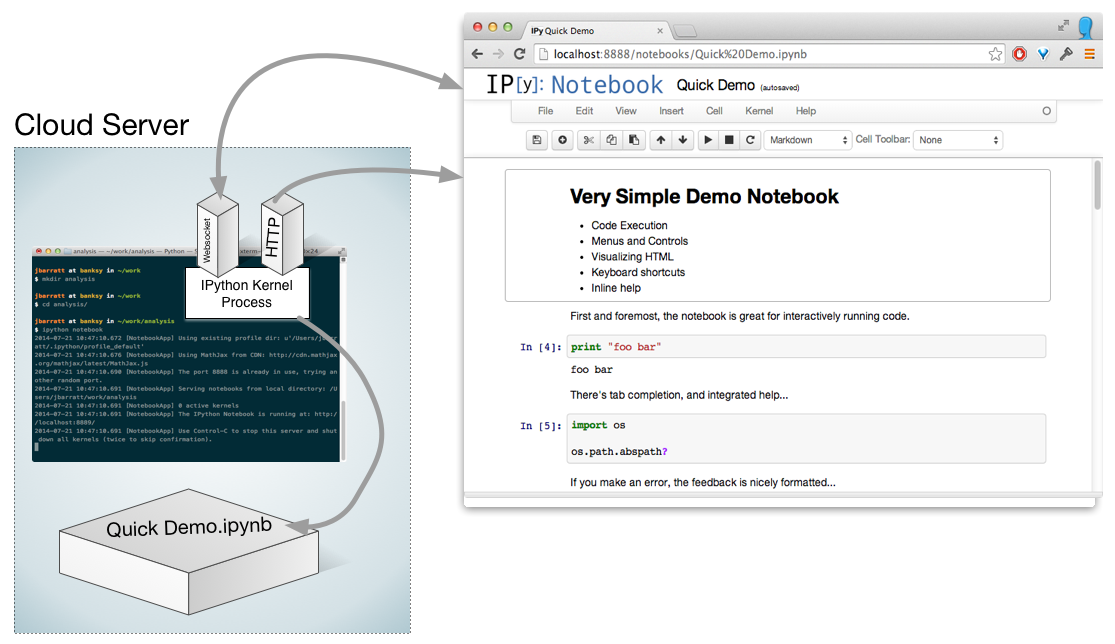

No Need To Be Local...

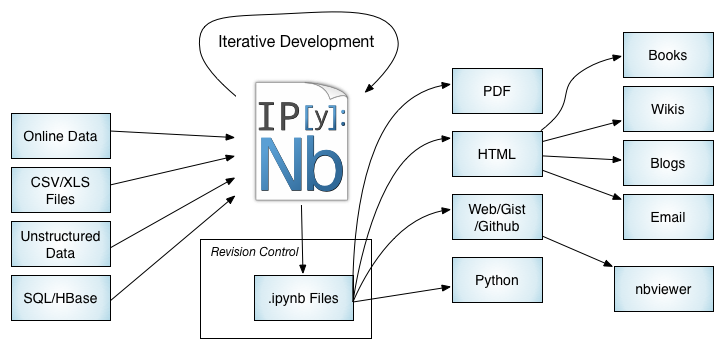

Notebook Workflows: The Big Picture

Not covered today but cool: clustering capabilities.

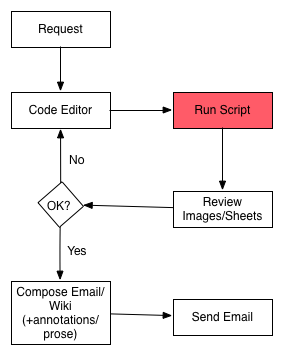

How I Fell: Report Workflow v1

Problems:

- slow (read whole data file each time, lots of context switching)

- version controlled analysis, but not commentary, difficult to 'go back to'

- Automating requires non-trivial additional dev

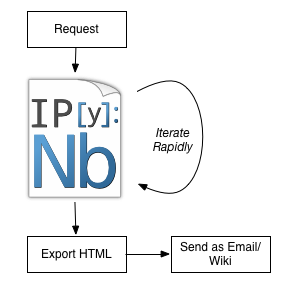

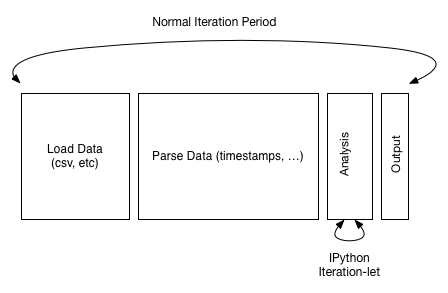

Report Workflow now()

Speedups primarily from no context switching, interactivity, and reusable data loading.

Reproducible, literate, annotatable, auditable.

Iterative Coding Example

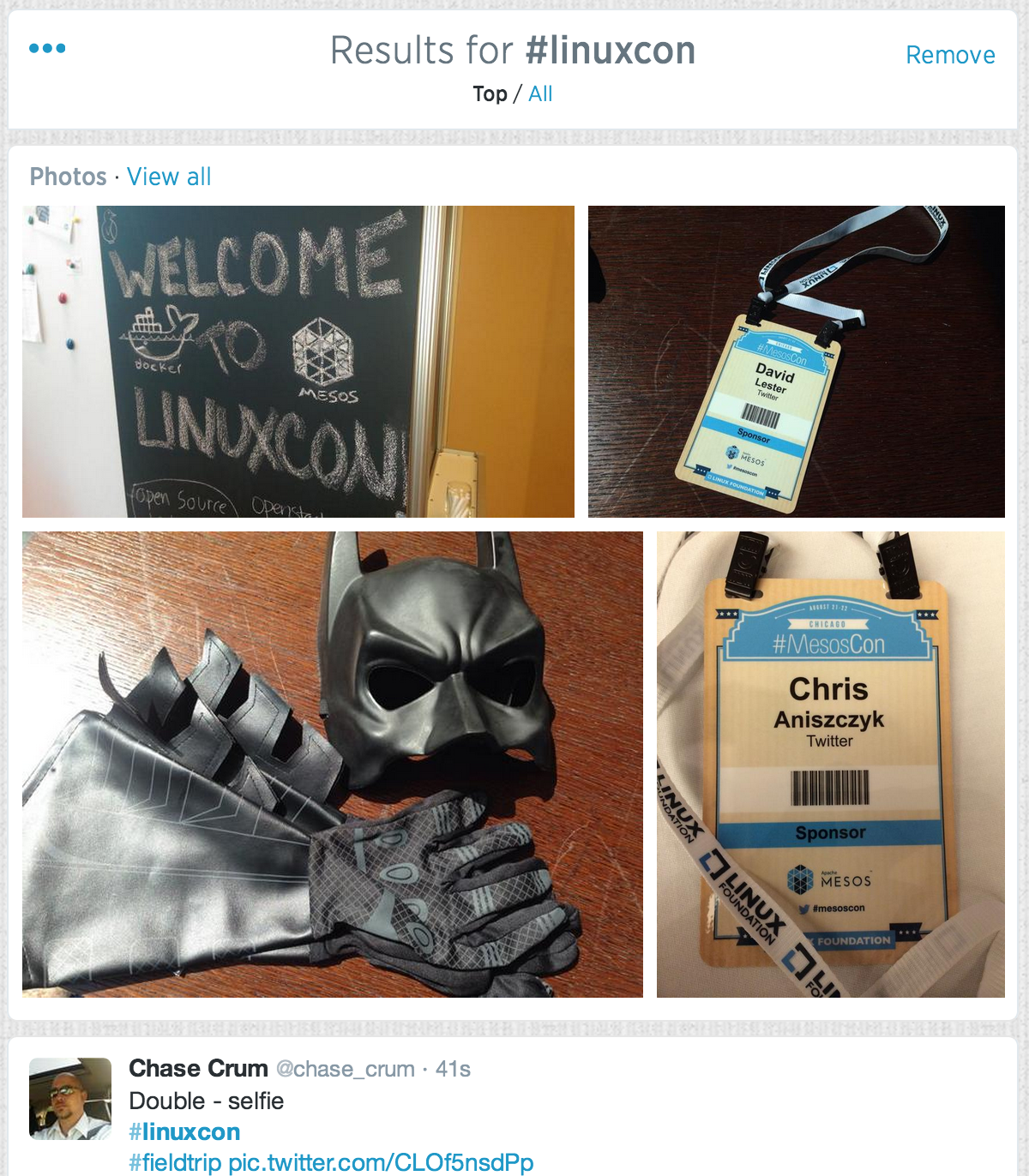

"What are the most popular links being tweeted about #linuxcon?"

Interactive Example: Sign in

import json

import twitter

creds = json.load(open('/Users/jbarratt/.twitter.json'))

auth = twitter.oauth.OAuth(creds['access_token'],
                           creds['access_token_secret'],
                           creds['api_key'],
                           creds['api_secret'])

twitter_api = twitter.Twitter(auth=auth)

Interactive Example: Search for '#linuxcon'

search_results = twitter_api.search.tweets(q='#linuxcon', count=5000)

statuses = search_results['statuses']

print len(statuses)

# Original source for this loop:

# http://nbviewer.ipython.org/github/ptwobrussell/Mining-the-Social-Web-2nd-Edition/blob/master/ipynb/Chapter%201%20-%20Mining%20Twitter.ipynb

for _ in range(5):
    try:
        next_results = search_results['search_metadata']['next_results']
    except KeyError:  # No more results when next_results doesn't exist
        break
    kwargs = dict([kv.split('=') for kv in next_results[1:].split("&")])
    search_results = twitter_api.search.tweets(**kwargs)
    statuses += search_results['statuses']

100

print len(statuses)

200
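The next_results value is a query string, so the standard library can do the splitting and also undo the percent-encoding. A minimal sketch in Python 3 (the cells in this talk are Python 2), assuming a made-up next_results value shaped like the ones the search API returns; the max_id below is hypothetical:

```python
from urllib.parse import parse_qsl

# Hypothetical value; real ones come from search_results['search_metadata'].
next_results = "?max_id=502221932486733823&q=%23linuxcon&count=100&include_entities=1"

# Drop the leading '?' and parse the key=value pairs, decoding %23 back to '#'.
kwargs = dict(parse_qsl(next_results[1:]))

print(kwargs["q"])     # prints #linuxcon
print(sorted(kwargs))  # ['count', 'include_entities', 'max_id', 'q']
```

Whether the API wants the decoded or the encoded form of q depends on the client library, so treat this as an alternative to try rather than a drop-in fix.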

Useful things to note

- Now we have a statuses list that's stored in our kernel. No hitting twitter rate limits.

- I got that code from the Mining the Social Web book, but I have no idea what to do next. We can explore.
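The list only lives as long as the kernel does. One way to keep the static data set across restarts is to dump it to disk; a minimal sketch in Python 3, assuming a hypothetical file name (statuses.json) and a stand-in list in place of real API results:

```python
import json
import os
import tempfile


def save_statuses(statuses, path):
    """Write the list of status dicts to disk as JSON."""
    with open(path, "w") as handle:
        json.dump(statuses, handle)


def load_statuses(path):
    """Read the list back, e.g. after a kernel restart."""
    with open(path) as handle:
        return json.load(handle)


# Stand-in data; in the notebook this would be the real `statuses` list.
sample = [{"id": 1, "text": "hello #linuxcon"}]
path = os.path.join(tempfile.mkdtemp(), "statuses.json")  # hypothetical location
save_statuses(sample, path)
restored = load_statuses(path)
```

Loading from the file instead of searching again keeps later runs away from the rate limits as well.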

Interactive Example: Inspect Results

# We know the results are in 'statuses', let's peek at one.

print json.dumps(statuses[1], indent=1)

{

"contributors": null,

"truncated": false,

"text": "RT @TechJournalist: the world\u2019s first 3D car #linuxcon http://t.co/bDMkG6lgQX",

"in_reply_to_status_id": null,

"id": 502283402863980545,

"favorite_count": 0,

"source": "<a href=\"http://twitter.com/download/android\" rel=\"nofollow\">Twitter for Android</a>",

"retweeted": false,

"coordinates": null,

"entities": {

"symbols": [],

"user_mentions": [

{

"id": 15488482,

"indices": [

3,

18

],

"id_str": "15488482",

"screen_name": "TechJournalist",

"name": "Sean Kerner"

}

],

"hashtags": [

{

"indices": [

45,

54

],

"text": "linuxcon"

}

],

"urls": [],

"media": [

{

"source_status_id_str": "502221932486733824",

"expanded_url": "http://twitter.com/TechJournalist/status/502221932486733824/photo/1",

"display_url": "pic.twitter.com/bDMkG6lgQX",

"url": "http://t.co/bDMkG6lgQX",

"media_url_https": "https://pbs.twimg.com/media/BvhAL6SIAAA3vfG.jpg",

"source_status_id": 502221932486733824,

"id_str": "502221931819827200",

"sizes": {

"small": {

"h": 255,

"resize": "fit",

"w": 340

},

"large": {

"h": 480,

"resize": "fit",

"w": 640

},

"medium": {

"h": 450,

"resize": "fit",

"w": 600

},

"thumb": {

"h": 150,

"resize": "crop",

"w": 150

}

},

"indices": [

55,

77

],

"type": "photo",

"id": 502221931819827200,

"media_url": "http://pbs.twimg.com/media/BvhAL6SIAAA3vfG.jpg"

}

]

},

"in_reply_to_screen_name": null,

"in_reply_to_user_id": null,

"retweet_count": 3,

"id_str": "502283402863980545",

"favorited": false,

"retweeted_status": {

"contributors": null,

"truncated": false,

"text": "the world\u2019s first 3D car #linuxcon http://t.co/bDMkG6lgQX",

"in_reply_to_status_id": null,

"id": 502221932486733824,

"favorite_count": 2,

"source": "<a href=\"https://about.twitter.com/products/tweetdeck\" rel=\"nofollow\">TweetDeck</a>",

"retweeted": false,

"coordinates": null,

"entities": {

"symbols": [],

"user_mentions": [],

"hashtags": [

{

"indices": [

25,

34

],

"text": "linuxcon"

}

],

"urls": [],

"media": [

{

"expanded_url": "http://twitter.com/TechJournalist/status/502221932486733824/photo/1",

"display_url": "pic.twitter.com/bDMkG6lgQX",

"url": "http://t.co/bDMkG6lgQX",

"media_url_https": "https://pbs.twimg.com/media/BvhAL6SIAAA3vfG.jpg",

"id_str": "502221931819827200",

"sizes": {

"small": {

"h": 255,

"resize": "fit",

"w": 340

},

"large": {

"h": 480,

"resize": "fit",

"w": 640

},

"medium": {

"h": 450,

"resize": "fit",

"w": 600

},

"thumb": {

"h": 150,

"resize": "crop",

"w": 150

}

},

"indices": [

35,

57

],

"type": "photo",

"id": 502221931819827200,

"media_url": "http://pbs.twimg.com/media/BvhAL6SIAAA3vfG.jpg"

}

]

},

"in_reply_to_screen_name": null,

"in_reply_to_user_id": null,

"retweet_count": 3,

"id_str": "502221932486733824",

"favorited": false,

"user": {

"follow_request_sent": false,

"profile_use_background_image": true,

"default_profile_image": false,

"id": 15488482,

"profile_background_image_url_https": "https://abs.twimg.com/images/themes/theme9/bg.gif",

"verified": false,

"profile_text_color": "666666",

"profile_image_url_https": "https://pbs.twimg.com/profile_images/422904373/vulcan_salute_bigger_normal.png",

"profile_sidebar_fill_color": "252429",

"entities": {

"url": {

"urls": [

{

"url": "http://t.co/zA94vcOwI7",

"indices": [

0,

22

],

"expanded_url": "http://news.google.com/news?hl=en&ned=us&q=%22sean+michael+kerner%22&ie=UTF-8&scorin",

"display_url": "news.google.com/news?hl=en&ned\u2026"

}

]

},

"description": {

"urls": []

}

},

"followers_count": 7557,

"profile_sidebar_border_color": "181A1E",

"id_str": "15488482",

"profile_background_color": "1A1B1F",

"listed_count": 401,

"is_translation_enabled": false,

"utc_offset": -14400,

"statuses_count": 21117,

"description": "IT consultant, technology user, tinkerer and sometimes Klingon",

"friends_count": 1210,

"location": "online",

"profile_link_color": "2FC2EF",

"profile_image_url": "http://pbs.twimg.com/profile_images/422904373/vulcan_salute_bigger_normal.png",

"following": false,

"geo_enabled": true,

"profile_background_image_url": "http://abs.twimg.com/images/themes/theme9/bg.gif",

"screen_name": "TechJournalist",

"lang": "en",

"profile_background_tile": false,

"favourites_count": 2548,

"name": "Sean Kerner",

"notifications": false,

"url": "http://t.co/zA94vcOwI7",

"created_at": "Sat Jul 19 00:55:42 +0000 2008",

"contributors_enabled": false,

"time_zone": "Eastern Time (US & Canada)",

"protected": false,

"default_profile": false,

"is_translator": false

},

"geo": null,

"in_reply_to_user_id_str": null,

"possibly_sensitive": false,

"lang": "en",

"created_at": "Wed Aug 20 22:33:34 +0000 2014",

"in_reply_to_status_id_str": null,

"place": null,

"metadata": {

"iso_language_code": "en",

"result_type": "recent"

}

},

"user": {

"follow_request_sent": false,

"profile_use_background_image": true,

"default_profile_image": false,

"id": 9463342,

"profile_background_image_url_https": "https://abs.twimg.com/images/themes/theme15/bg.png",

"verified": false,

"profile_text_color": "333333",

"profile_image_url_https": "https://pbs.twimg.com/profile_images/3360362988/bffebd957a5d9a2c34fa90307db35330_normal.jpeg",

"profile_sidebar_fill_color": "C0DFEC",

"entities": {

"description": {

"urls": []

}

},

"followers_count": 218,

"profile_sidebar_border_color": "A8C7F7",

"id_str": "9463342",

"profile_background_color": "022330",

"listed_count": 25,

"is_translation_enabled": false,

"utc_offset": -14400,

"statuses_count": 3090,

"description": "Suffers from skiing addiction; Director of Strategic Programs at The Linux Foundation. Did I mention also slightly addicted to technology?",

"friends_count": 314,

"location": "",

"profile_link_color": "0084B4",

"profile_image_url": "http://pbs.twimg.com/profile_images/3360362988/bffebd957a5d9a2c34fa90307db35330_normal.jpeg",

"following": false,

"geo_enabled": false,

"profile_banner_url": "https://pbs.twimg.com/profile_banners/9463342/1398310027",

"profile_background_image_url": "http://abs.twimg.com/images/themes/theme15/bg.png",

"screen_name": "mdolan",

"lang": "en",

"profile_background_tile": false,

"favourites_count": 89,

"name": "Mike Dolan",

"notifications": false,

"url": null,

"created_at": "Mon Oct 15 21:10:59 +0000 2007",

"contributors_enabled": false,

"time_zone": "Eastern Time (US & Canada)",

"protected": false,

"default_profile": false,

"is_translator": false

},

"geo": null,

"in_reply_to_user_id_str": null,

"possibly_sensitive": false,

"lang": "en",

"created_at": "Thu Aug 21 02:37:49 +0000 2014",

"in_reply_to_status_id_str": null,

"place": null,

"metadata": {

"iso_language_code": "en",

"result_type": "recent"

}

}

Interactive Example: Link Extraction Attempt

import re # important note; this is common practice in notebooks, but violates PEP8

# "Imports are always put at the top of the file, just after any

# module comments and docstrings, and before module globals and constants."

# Yes, this is a terrible way to find URL-like strings.

re.findall(r'(https?://\S*)', statuses[0]['text'])

[u'http://t.co/e4Z5rlGpLb']

That looks plausible. Let's try applying that to all our results.

urls = []

for status in statuses:
    urls += re.findall(r'(https?://\S*)', status['text'])

urls[0:10]

[u'http://t.co/e4Z5rlGpLb', u'http://t.co/bDMkG6lgQX', u'http://t.co/CLOf5nsdPp', u'http://t.co/sp1g2oWfHB', u'http://t.co/OxrSYOrm6S', u'http://t.\u2026', u'http://t.co/dMikYBhbpd', u'http://t.co/tMyCAxBwyS),', u'http://t.co/26nvyxuH30', u'http://t.co/sf6p5\u2026']

Huh, not great. If we use the text, it looks like things get truncated. (\u2026 is … in Unicode, which is what you see when a tweet trails off.)
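The truncation is easy to reproduce offline. A small sketch in Python 3, using a made-up tweet text (not one of the real results) that trails off with an ellipsis in the middle of a link:

```python
import re

# Hypothetical tweet text ending in a truncated link.
text = u"RT @someone: great talk http://t.co/abc123 and slides http://t.\u2026"

found = re.findall(r'(https?://\S*)', text)

# \S* happily swallows the ellipsis, so truncated links can be spotted by it.
truncated = [url for url in found if url.endswith(u"\u2026")]
usable = [url for url in found if url not in truncated]

print(usable)          # ['http://t.co/abc123']
print(len(truncated))  # 1
```

Filtering like this only hides the damage; the cut-off part of the link is simply not in the text field.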

Looking at the JSON again, it looks like a lot of these have ['entities']['urls'][(list)]['expanded_url']. Let's try for those.

Interactive Example: Link Extraction Take Two

urls = []

for status in statuses:
    try:
        urls += [x['expanded_url'] for x in status['entities']['urls']]
    except KeyError:
        # Some statuses may lack 'entities' or 'urls'; skip them
        pass

urls[0:5]

[u'http://www.slideshare.net/jpetazzo/docker-linux-containers-lxc-and-security', u'http://bit.ly/1vjbwEV', u'http://www.brendangregg.com/linuxperf.html', u'http://www.slideshare.net/aimeemaree/firefoxos-and-its-use-of-linux-a-deep-dive-into-gonk-architecture', u'http://instagram.com/p/r8WieND4Jc/']

Great! No more unicode weirdness. But, those shortened links are still redirects. Can we resolve them?

Interactive Example: Redirect Resolution

import requests

rv = requests.get('http://bit.ly/1vjbwEV')

rv.url

u'http://www.linuxtoday.com/developer/what-the-linux-foundation-does-for-linux-linuxcon.html'

Handy. Turns out if you get something with requests, you can just access the .url property to find where it ended up after following all the redirects.
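The same idea can be tried without touching the network. The sketch below swaps requests for the standard library's urllib (Python 3) and uses a tiny local server as a hypothetical stand-in for a link shortener; the /short and /final paths are invented for the demo:

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class ShortenerStub(BaseHTTPRequestHandler):
    """Hypothetical stand-in for a link shortener: /short redirects to /final."""

    def do_GET(self):
        if self.path == "/short":
            self.send_response(302)
            self.send_header("Location", "/final")
            self.end_headers()
        else:
            body = b"landed"
            self.send_response(200)
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def resolve(url, timeout=5):
    """Return the URL reached after following any redirects."""
    # An empty ProxyHandler keeps the local demo away from any configured proxy.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(url, timeout=timeout) as response:
        return response.geturl()


server = HTTPServer(("127.0.0.1", 0), ShortenerStub)  # port 0: pick any free port
threading.Thread(target=server.serve_forever, daemon=True).start()
base = "http://127.0.0.1:%d" % server.server_port
try:
    final_url = resolve(base + "/short")
finally:
    server.shutdown()
    server.server_close()

print(final_url == base + "/final")  # True
```

With requests the equivalent of geturl() is the .url property shown above; the timeout is worth keeping in either version so one slow link cannot stall the whole loop.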

Interactive Example: Counting URLs

from collections import Counter
import requests

# collections.Counter is a handy way to find 'Top N'
popular = Counter()

# Don't need to look up the same short link twice.
cache = {}

for url in urls:
    if url in cache:
        popular[cache[url]] += 1
    else:
        try:
            rv = requests.get(url)
            # resolve the original URL
            cache[url] = rv.url
            popular[rv.url] += 1
        except Exception:
            # ignore anything bad that happens
            pass
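When this loop gets 'baked' into something more permanent, passing the resolver in as a function makes the counting logic testable offline. A sketch in Python 3 under that assumption; count_resolved and fake_resolve are hypothetical names, and the fake redirect table stands in for real HTTP lookups:

```python
from collections import Counter


def count_resolved(urls, resolve):
    """Count final URLs; a URL that resolves successfully is only looked up once."""
    popular = Counter()
    cache = {}
    for url in urls:
        if url not in cache:
            try:
                cache[url] = resolve(url)
            except Exception:
                continue  # ignore anything bad that happens, as in the cell above
        popular[cache[url]] += 1
    return popular, cache


# Hypothetical redirect table standing in for the network.
fake_redirects = {
    "http://short/a": "http://example.com/talk",
    "http://short/b": "http://example.com/talk",
}
calls = []


def fake_resolve(url):
    calls.append(url)
    return fake_redirects[url]  # raises KeyError for unknown links


urls = ["http://short/a", "http://short/b", "http://short/a", "http://short/missing"]
popular, cache = count_resolved(urls, fake_resolve)
print(popular.most_common(1))  # [('http://example.com/talk', 3)]
print(len(calls))              # 3
```

In the notebook, the real resolver would be something like a small function wrapping requests.get(url).url.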

Interactive Example: Results

popular.most_common(10)

[(u'http://www.brendangregg.com/linuxperf.html', 28), (u'http://www.slideshare.net/jpetazzo/docker-linux-containers-lxc-and-security', 15), (u'http://www.slideshare.net/aimeemaree/firefoxos-and-its-use-of-linux-a-deep-dive-into-gonk-architecture', 9), (u'http://lccona14.sched.org/event/8e7a38ff932af2546adf72fe77dd0374', 7), (u'http://www.zdnet.com/linus-torvalds-still-wants-the-linux-desktop-7000032805/', 6), (u'http://www.linuxtoday.com/developer/what-the-linux-foundation-does-for-linux-linuxcon.html', 2), (u'http://instagram.com/p/r8JiCXjU82/', 1), (u'http://www.linuxtoday.com/developer/thanks-for-making-games-faster-top-10-quotes-from-the-linux-kernel-developer-panel-linuxcon.html', 1), (u'http://instagram.com/p/r8WieND4Jc/', 1), (u'http://instagram.com/p/r8K8VHD4IC/', 1)]

Why was that cool?

- Interactive & Exploratory.

- Scroll back up, re-review JSON, go another route

- Cached all the things

- Not hitting twitter a bunch (rate limits, etc)

- Static data set (not changing every time you run the code)

- Can even keep developing while on conference wifi (oohhhhhh)

- Easy to keep around as a log for future experiments

- Easy to take that learning and 'bake' it into something more permanent

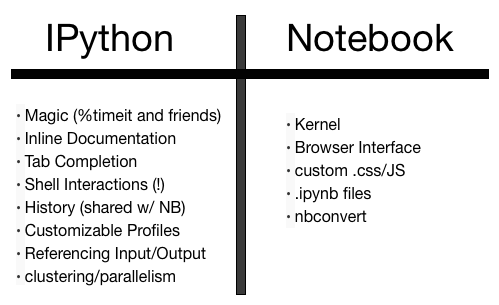

The "IPython" in "IPython Notebook": Interactive Python

The Future

Skills port to the REPL

$ ipython

Python 2.7.6 (default, Jan 28 2014, 10:24:42)

Type "copyright", "credits" or "license" for more information.

IPython 3.0.0-dev -- An enhanced Interactive Python.

? -> Introduction and overview of IPython's features.

%quickref -> Quick reference.

help -> Python's own help system.

object? -> Details about 'object', use 'object??' for extra details.

In [1]: import webbrowser

In [2]: webbrowser.

webbrowser.BackgroundBrowser webbrowser.MacOSX webbrowser.open_new_tab

webbrowser.BaseBrowser webbrowser.MacOSXOSAScript webbrowser.os

webbrowser.Chrome webbrowser.Mozilla webbrowser.register

webbrowser.Chromium webbrowser.Netscape webbrowser.register_X_browsers

webbrowser.Elinks webbrowser.Opera webbrowser.shlex

webbrowser.Error webbrowser.UnixBrowser webbrowser.stat

webbrowser.Galeon webbrowser.get webbrowser.subprocess

webbrowser.GenericBrowser webbrowser.main webbrowser.sys

webbrowser.Grail webbrowser.open webbrowser.time

webbrowser.Konqueror webbrowser.open_new

Interactive Gotcha: Single Namespace

As you recall:

So what happens when you do...

x = 5

x

5

# I ran this cell a few times

x += 1

x

12
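A rough model of what is going on, as a Python 3 sketch: every cell executes against one shared namespace, so re-running a cell mutates the same x. The run_cell helper is hypothetical, not an IPython API, and seven re-runs is simply the count that would turn 5 into 12:

```python
namespace = {}  # stands in for the kernel's single shared namespace


def run_cell(source):
    """Execute a cell's source against the shared namespace."""
    exec(source, namespace)


run_cell("x = 5")
for _ in range(7):  # running the same cell seven times
    run_cell("x += 1")
after_reruns = namespace["x"]
print(after_reruns)  # 12

run_cell("x = 5")  # re-running the defining cell resets the state
after_reset = namespace["x"]
print(after_reset)  # 5
```

The practical upshot is that the value of a variable depends on the order and number of cell executions, not on the order of cells on the page.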

IPython Magic: Development Powertools

Which method is faster?

import random, string

# make a big list of random strings

words = [''.join(random.choice(string.ascii_uppercase) for _ in range(6)) for _ in range(1000)]

# Plan A: turn them all into lowercase with a list comprehension
def listcomp_lower(words):
    return [w.lower() for w in words]

# Plan B: Start with a list, and word by word append the lowercase versions
def append_lower(words):
    new = []
    for w in words:
        new.append(w.lower())
    return new

# %timeit is IPython Magic to do a quick benchmark

%timeit append_lower(words)

1000 loops, best of 3: 249 µs per loop

%timeit listcomp_lower(words)

10000 loops, best of 3: 179 µs per loop
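Outside IPython, the standard library's timeit module gives a comparable measurement. A minimal Python 3 sketch of the same comparison; the loop count of 200 is an arbitrary choice for a quick run, and the timings will differ from the numbers above:

```python
import random
import string
import timeit

# make a big list of random strings, as in the cell above
words = ["".join(random.choice(string.ascii_uppercase) for _ in range(6))
         for _ in range(1000)]


def listcomp_lower(words):
    return [w.lower() for w in words]


def append_lower(words):
    new = []
    for w in words:
        new.append(w.lower())
    return new


NUMBER = 200  # calls per timing run
for func in (append_lower, listcomp_lower):
    # repeat=3 mirrors the "best of 3" that %timeit reports
    runs = timeit.repeat(lambda: func(words), number=NUMBER, repeat=3)
    best_per_call = min(runs) / NUMBER
    print("%s: %.1f us per call" % (func.__name__, best_per_call * 1e6))
```

Taking the minimum of the repeats follows the same reasoning as %timeit: slower runs are mostly noise from other activity on the machine.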

%lsmagic

Available line magics:
%alias %alias_magic %autocall %automagic %autosave %bookmark %cat %cd %clear %colors %config %connect_info %cp %debug %dhist %dirs %doctest_mode %ed %edit %env %gui %hist %history %install_default_config %install_ext %install_profiles %killbgscripts %ldir %less %lf %lk %ll %load %load_ext %loadpy %logoff %logon %logstart %logstate %logstop %ls %lsmagic %lx %macro %magic %man %matplotlib %mkdir %more %mv %notebook %page %pastebin %pdb %pdef %pdoc %pfile %pinfo %pinfo2 %popd %pprint %precision %profile %prun %psearch %psource %pushd %pwd %pycat %pylab %qtconsole %quickref %recall %rehashx %reload_ext %rep %rerun %reset %reset_selective %rm %rmdir %run %save %sc %store %sx %system %tb %time %timeit %unalias %unload_ext %who %who_ls %whos %xdel %xmode

Available cell magics:
%%! %%HTML %%SVG %%bash %%capture %%debug %%file %%html %%javascript %%latex %%perl %%prun %%pypy %%python %%python2 %%python3 %%ruby %%script %%sh %%svg %%sx %%system %%time %%timeit %%writefile

Automagic is ON, % prefix IS NOT needed for line magics.

Don't Panic

%%writefile?

%writefile [-a] filename

Write the contents of the cell to a file.

Exporting

ipynb format is clean, readable JSON, which inlines any output results, including base64'd images.

...

{

"cell_type": "markdown",

"metadata": {

"slideshow": {

"slide_type": "slide"

}

},

"source": [

"# Magic can be magical"

]

},

...
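Because it is plain JSON, a notebook can be inspected with nothing but the json module. A minimal Python 3 sketch, assuming the layout with a top-level "cells" list (older format versions nest cells under "worksheets"); the tiny notebook and the slide_titles helper are made up for illustration:

```python
import json

# Hypothetical two-cell notebook, reusing the markdown cell shown above.
notebook_json = """
{
 "cells": [
  {"cell_type": "markdown",
   "metadata": {"slideshow": {"slide_type": "slide"}},
   "source": ["# Magic can be magical"]},
  {"cell_type": "code",
   "metadata": {},
   "execution_count": null,
   "outputs": [],
   "source": ["%lsmagic"]}
 ],
 "metadata": {},
 "nbformat": 4,
 "nbformat_minor": 0
}
"""


def slide_titles(notebook):
    """Return the source of every markdown cell that starts a new slide."""
    titles = []
    for cell in notebook.get("cells", []):
        slide_type = cell.get("metadata", {}).get("slideshow", {}).get("slide_type")
        if cell["cell_type"] == "markdown" and slide_type == "slide":
            titles.append("".join(cell["source"]))
    return titles


notebook = json.loads(notebook_json)
print(slide_titles(notebook))  # ['# Magic can be magical']
```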

Great Notebook Use Cases

There are many use cases where the notebook makes a lot of sense to use. Here are a few illustrated examples:

- Code Mentorship

- Documentation/Runbooks

- Data Normalization (+ Inline Error Resolution)

- Data Analysis, Portland Example

- Web Logs

- Blogging

- Wiki'ing...

We won't go into them all for time, but a few highlights:

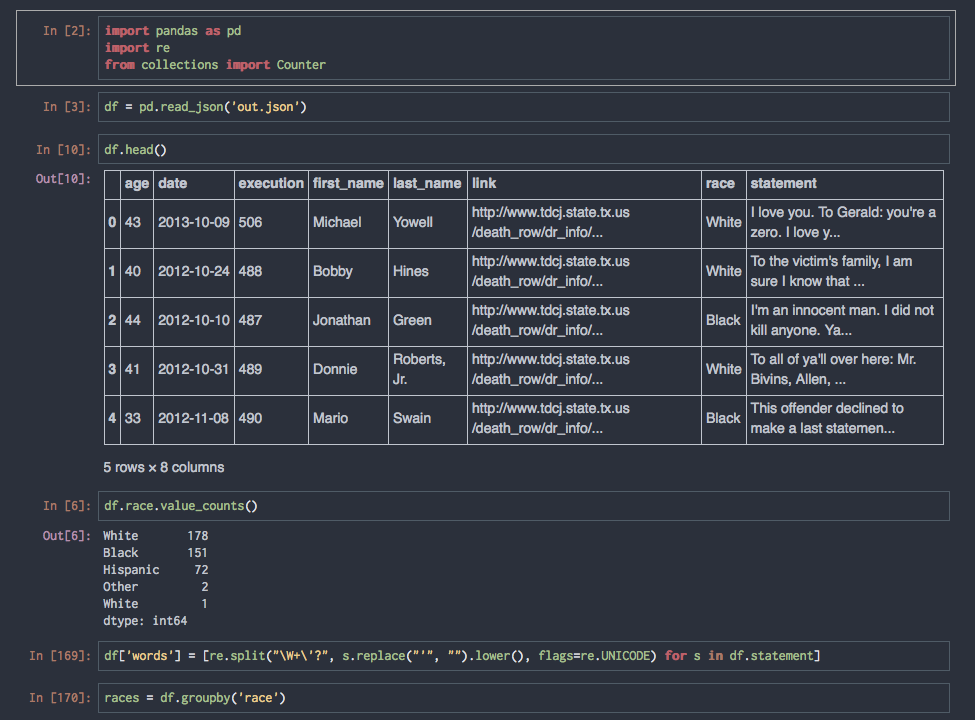

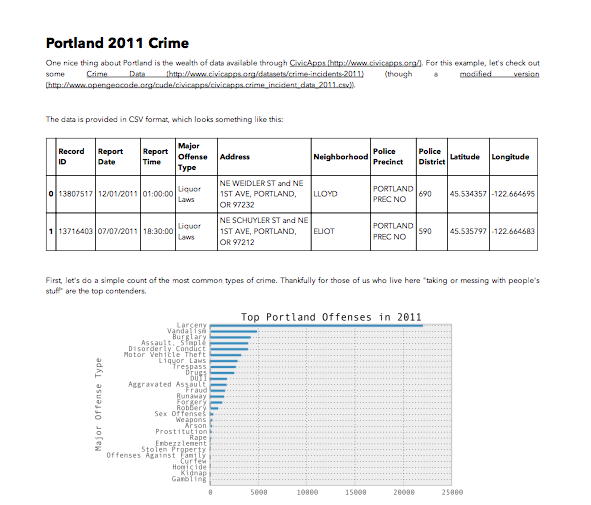

Use Case: Data Analysis

This is the gateway drug that gets many people into IPython Notebook. It's the real sweet spot between what makes Python great (pandas, scikit*, numpy, matplotlib, etc) and IPython Notebook great (Literate, Visual, Interactive, Iterative.)

Did I permanently ruin your ability to hear the term 'big data' without thinking of this? You're welcome.

Use Case: Wiki Publishing

Also can work for HTML emails, etc.

Use Case: Blogging

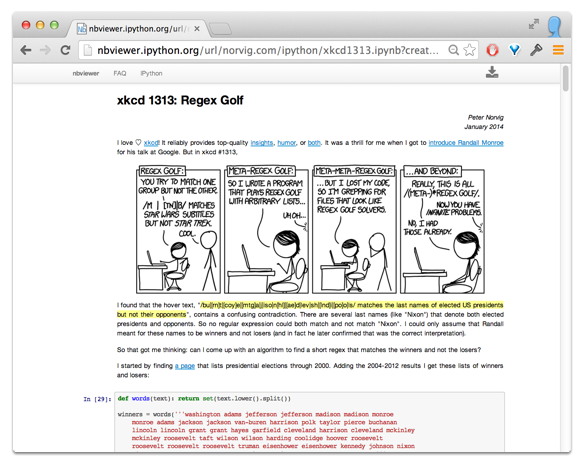

When the guy who wrote my AI Textbook uses it, you know it's good software!

Clearing up the clutter

Lots of the slides had more code than we might want in a report. There are several approaches, and it's on the notebook roadmap to add an 'official' way to do this.

- %run magic runs another notebook, pulling variables in

- Move code to a local module (ipython nbconvert --to python & refactor) or build a real module (Tip: %load_ext autoreload; %autoreload 2 or %aimport mymodule)

- Use a custom output template

- Easiest: Hide cells with a custom.css. (Annoying caveat!)

/* Boss Mode */
div.input {
    display: none;
}

div.output_prompt {
    display: none;
}

div.output_text {
    display: none;
}

Boss Mode HTML -> PDF Output

Customized Displays

Hey, we have HTML to play with! There are many ways to display prettier things inline.

- ipy_table does nice HTML Table display of list/tab data which isn't worth putting into pandas.

- IPython Display System covers many more capabilities in detail

Simple Custom HTML:

from IPython.core.display import HTML

def foo():
    raw_html = "<h1>Yah, rendered HTML</h1>"
    return HTML(raw_html)

Rich Objects

You can also define additional __repr__()-type methods on custom objects.

This has all kinds of fun possibilities.

The method names follow the _repr_html_() pattern, with variants for svg, png, jpeg, javascript, and latex.

class FancyText(object):
    def __init__(self, text):
        self.text = text

    def _repr_html_(self):
        """ Use some fancy CSS3 styling when we return this """
        style=("text-shadow: 0 1px 0 #ccc,0 2px 0 #c9c9c9,0 3px 0 #bbb,"
               "0 4px 0 #b9b9b9,0 5px 0 #aaa,0 6px 1px rgba(0,0,0,.1)")
        return '<h1 style="{}">{}</h1>'.format(style, self.text)

FancyText("Hello #linuxcon!")

Hello #linuxcon!
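The same hook works for small tabular data. A minimal Python 3 sketch with a hypothetical SimpleTable class; the method is called directly here so the example runs outside a notebook, whereas in the notebook the object would render itself when it is the last expression in a cell:

```python
from html import escape


class SimpleTable(object):
    """Hypothetical helper: render a list of rows as an HTML table."""

    def __init__(self, rows):
        self.rows = rows

    def _repr_html_(self):
        # Escape every cell so stray '<' or '&' characters cannot break the markup.
        body = "".join(
            "<tr>%s</tr>" % "".join("<td>%s</td>" % escape(str(cell)) for cell in row)
            for row in self.rows
        )
        return "<table>%s</table>" % body


table = SimpleTable([["host", "latency"], ["web<1>", 12]])
print(table._repr_html_())
```

Escaping matters more here than in FancyText because table cells often hold raw log or hostname data.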

Automated Testing

Many options for testing, nothing too formal yet. These could easily be run by Travis/Jenkins/...

$ ./checkipnb.py xkcd1313.ipynb

running xkcd1313.ipynb

.........

FAILURE:

def test1():

assert subparts('^it$') == {'^', 'i', 't', '$', '^i', 'it', 't$', '^it', 'it$', '^it$'}

test1()

-----

raised:

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

<ipython-input-11-a4492b0ec0d5> in <module>()

---> 26 test1()

<ipython-input-11-a4492b0ec0d5> in test1()

22 assert words('This is a TEST this is') == {'this', 'is', 'a', 'test'}

---> 23 assert lines('Testing / 1 2 3 / Testing over') == {'TESTING', '1 2 3', 'TESTING OVER'}

NameError: global name 'lines' is not defined

.............

ran notebook

ran 22 cells

1 cells raised exceptions
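The core of such a checker is small. The following Python 3 sketch is not how checkipnb.py works (it runs cells through a real kernel); it is a simplified model that executes plain-Python cell sources in one shared namespace and counts the failures. The run_cells name and the sample cells are hypothetical, and IPython magics would not run this way:

```python
import traceback


def run_cells(sources):
    """Execute cell sources in order in one namespace; collect any failures."""
    namespace = {}
    failures = []
    for index, source in enumerate(sources):
        try:
            exec(compile(source, "<cell %d>" % index, "exec"), namespace)
        except Exception:
            failures.append((index, traceback.format_exc()))
    return failures


cells = [
    "def words(text):\n    return set(text.lower().split())",
    "assert words('This is a TEST this is') == {'this', 'is', 'a', 'test'}",
    "assert lines('Testing') == {'TESTING'}",  # 'lines' is never defined
]
failures = run_cells(cells)
print("ran %d cells" % len(cells))
print("%d cells raised exceptions" % len(failures))
```

A CI job could fail the build whenever the failures list is non-empty.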

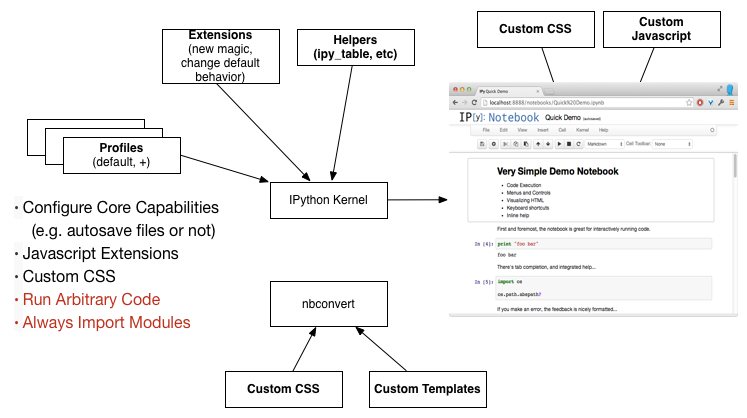

IPython (& Notebook) Customization

See more on Profiles, Javascript Extensions, IPython Extensions, and nbconvert Templates

profile = !ipython locate profile

print profile

custom_js = profile[0] + "/static/custom/custom.js"

print custom_js

!head $custom_js

['/Users/jbarratt/.ipython/profile_default']
/Users/jbarratt/.ipython/profile_default/static/custom/custom.js
// leave at least 2 line with only a star on it below, or doc generation fails
/**
 *
 *
 * Placeholder for custom user javascript
 * mainly to be overridden in profile/static/custom/custom.js
 * This will always be an empty file in IPython
 *
 * User could add any javascript in the `profile/static/custom/custom.js` file
 * (and should create it if it does not exist).

Thankfully you can organize them in unique files, and just require them in custom.js

$([IPython.events]).on('app_initialized.NotebookApp', function(){
    require(['/static/custom/clean_start.js']);
    require(['/static/custom/styling/css-selector/main.js']);
})

Javascript, Huh, What is it good for

Customizing the UI

IPython.toolbar.add_buttons_group([
    {
        id : 'toggle_codecells',
        label : 'Toggle codecell display',
        icon : 'icon-list-alt',
        callback : toggle
    }
]);

And more...

Turns out, a lot! You can execute anything you can run in an IPython Notebook cell.

IPython.notebook.kernel.execute("!rm -rf /")

Demo Of a less scary example

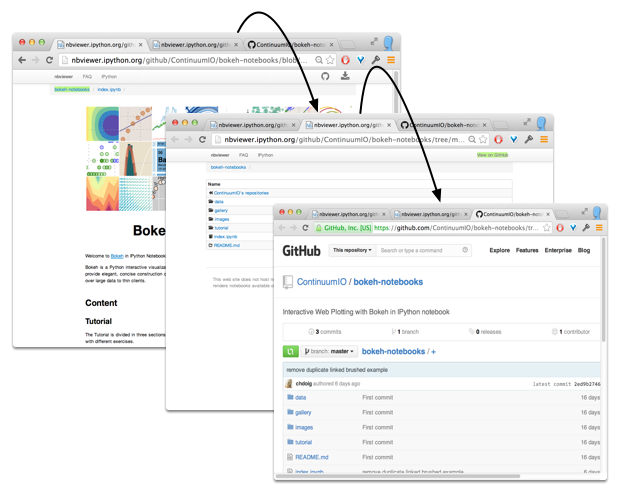

Sharing Notebooks

Personal Archive

One useful thing with having lots of notebooks around is high context sample code for solving future problems.

I wrote a simple tool (only works on OSX for now, yikes): nbgrep

!nbgrep seaborn

/Users/jbarratt/work/ipn2/Graphite Time Series.ipynb: import seaborn as sns
/Users/jbarratt/work/ipython_notebook_presentation/Graphite Time Series.ipynb: import seaborn as sns
/Users/jbarratt/work/mt/hash_buckets/host_hashing.ipynb: import seaborn as sns
/Users/jbarratt/work/notebookcookbook/Graphite Time Series.ipynb: import seaborn as sns
/Users/jbarratt/work/notebookcookbook/ipython_notebook_presentation/Graphite Time Series.ipynb: import seaborn as sns
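For other platforms, a slower but portable version is possible in plain Python. This sketch is not the nbgrep tool above; it is a hypothetical stand-in that walks a directory tree and searches the cell sources of each .ipynb file. It assumes cells sit in a top-level "cells" list or, in older files, under "worksheets" with code in an "input" field:

```python
import json
import os
import tempfile


def iter_cell_lines(notebook):
    """Yield every source line from every cell of a parsed notebook."""
    cells = list(notebook.get("cells", []))
    for worksheet in notebook.get("worksheets", []):  # older layout (assumption)
        cells.extend(worksheet.get("cells", []))
    for cell in cells:
        source = cell.get("source", cell.get("input", []))
        if isinstance(source, str):
            source = source.splitlines()
        for line in source:
            yield line.rstrip("\n")


def nbgrep(pattern, root):
    """Return (path, line) pairs for cell lines containing pattern."""
    matches = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in sorted(filenames):
            if not name.endswith(".ipynb"):
                continue
            path = os.path.join(dirpath, name)
            try:
                with open(path) as handle:
                    notebook = json.load(handle)
            except (OSError, ValueError):
                continue  # unreadable file or not valid JSON
            for line in iter_cell_lines(notebook):
                if pattern in line:
                    matches.append((path, line))
    return matches


# Demo on a throwaway directory holding one made-up notebook.
demo_dir = tempfile.mkdtemp()
demo_nb = {"cells": [{"cell_type": "code",
                      "source": ["import seaborn as sns\n", "sns.set()"]}]}
with open(os.path.join(demo_dir, "demo.ipynb"), "w") as handle:
    json.dump(demo_nb, handle)

results = nbgrep("seaborn", demo_dir)
for path, line in results:
    print("%s: %s" % (path, line))
```

Searching only the cell sources avoids false hits inside base64 image output, which a plain grep over the files would produce.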

Oh, one more thing

IT CAME FROM INSIDE THE NOTEBOOK

- Highly technical decks can be created quickly

- Collaboration features are still quite useful

- Check It Out

Building slides

- Turn on the 'slideshow' cell toolbar

- Types:

- Slide: start a new slide

- -: Continue a slide

- Sub-Slide: Make a 'down' slide

- Fragment: Make a 'bullet' type incoming slide

- Skip: keep in the notebook, not the deck

- Notes: speaker notes

!ipython nbconvert Presentation.ipynb --to slides

[NbConvertApp] Using existing profile dir: u'/Users/jbarratt/.ipython/profile_default'
[NbConvertApp] Converting notebook 00 Presentation.ipynb to slides
[NbConvertApp] Support files will be in 00 Presentation_files/
[NbConvertApp] Loaded template slides_reveal.tpl
[NbConvertApp] Writing 188767 bytes to 00 Presentation.slides.html

Other Resources

Try It Online

Installing

- $ pip install ipython[all] (brew install python) OR

- Anaconda OR

- docker-ipython

- Preloaded with lots of sometimes challenging-to-install packages like Pattern, NLTK, Pandas, NumPy, SciPy, Numba, Biopython...

Learning More

- Slides & example notebooks will be up on the OSCON site later.

- A Gallery of Interesting IPython Notebooks

- Extensions

- nbviewer (good way to discover organically)

- Pandas/numpy, Statsmodels, Matplotlib, bokeh, vincent, scikit-learn, scikit-image, .... (F150!)

- Talk to me! @jbarratt on twitter, jbarratt@serialized.net

Credits

- Quill designed by Simple Icons from the Noun Project,

- Settings designed by Clément thorez from the Noun Project,

- Photo designed by Simple Icons from the Noun Project,

- Recurring Edit designed by Lemon Liu from the Noun Project

- Cover page texture from grungetextures via Flickr.